In a series of four articles, Senior Project Manager and 7N IT specialist Lars Søndergaard focuses on the challenges and possibilities he sees with data migration in the pharma industry.

Besides management, Lars has data warehousing and business intelligence as core competencies and more than 20 years of experience within his field. He has gained much of that experience within the pharma industry, most recently from an assignment at LEO Pharma as a Project Manager and Data Governance Consultant.

How hard can it be?

The migration of data from a legacy system to a new system seems to be a trivial task to many. Unfortunately, that is not the case. Many projects experience significant delays, cost increases, or failures due to the inherent complexities of data migration.

Data quality

Legacy systems hold data from years back, and the data itself is often a result of earlier data migrations. Over time business processes have varied and the data with them.

As a result, migration rules will be complex and require skilled data analysts to identify data quality issues and advise the business on how best to solve them.

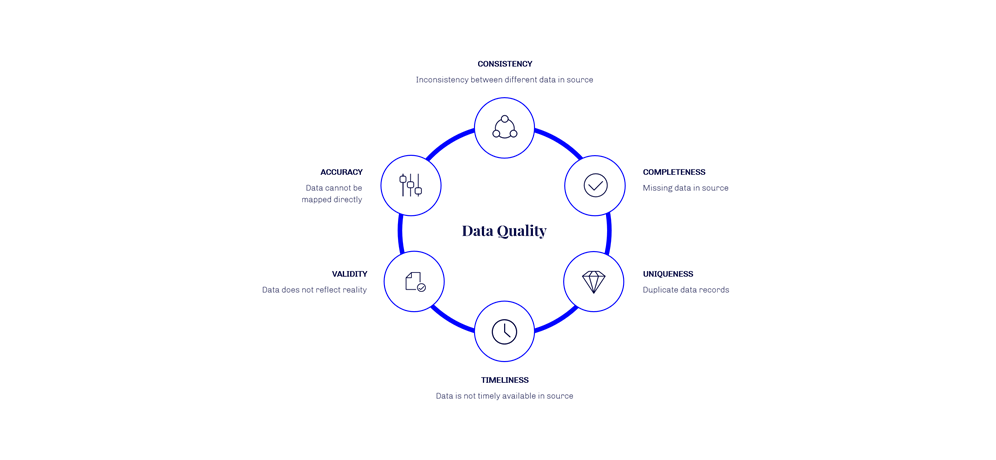

The following dimensions for data quality should be considered:

Data quality is one of the major factors for increasing cost and reducing the value of the data migration product.

Often data quality issues are identified when analyzing the rules for migrating data from source to target system. In agile refinements, the migrations are done sprint by sprint, with the effect that critical data quality issues might be identified late in the process.

Data profiling should be part of the data migration process throughout the whole project, and if possible, broader data profiling should be considered early in a project.
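As an illustration of what early, broad profiling can look like, here is a minimal sketch in Python using only the standard library. The column names and sample rows are hypothetical and not taken from any real system. A real project would profile full extracts and many more dimensions than the completeness and distinct counts shown here.

```python
from collections import Counter

def profile(rows, columns):
    """Return per-column completeness, distinct count, and most common value."""
    total = len(rows)
    report = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        # Treat None and blank strings as missing values.
        present = [v for v in values if v is not None and str(v).strip() != ""]
        counts = Counter(present)
        report[col] = {
            "completeness": len(present) / total if total else 0.0,
            "distinct": len(counts),
            "top": counts.most_common(1)[0] if counts else None,
        }
    return report

# Hypothetical legacy extract; column names are illustrative only.
legacy_rows = [
    {"product_code": "P-001", "country": "DK", "strength": "10 mg"},
    {"product_code": "P-002", "country": "dk", "strength": ""},
    {"product_code": "P-002", "country": "DK", "strength": None},
    {"product_code": "P-003", "country": None, "strength": "5mg"},
]

report = profile(legacy_rows, ["product_code", "country", "strength"])
for col, stats in report.items():
    print(col, stats)
```

Even this small report surfaces typical findings: a repeated product code, inconsistent casing in the country column, and a strength field that is only half populated.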

It is important to take data profiling beyond the SQL workbench.

- Azure and AWS have complete tool offerings. Algorithms to, e.g., identify duplicates in location, person, or organization data sets, can be found on the internet.

- Modern technologies, such as advanced parsing and natural language processing, can be used to detect data patterns that do not match industry standards such as IDMP and XEVMPD.
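To make the duplicate-detection idea concrete, the following is a simple sketch that compares organization names with `difflib.SequenceMatcher` from the Python standard library. It is one possible approach, not a recommendation of a specific algorithm. The sample names and the 0.85 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(name):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower())
    return " ".join(cleaned.split())

def likely_duplicates(names, threshold=0.85):
    """Return pairs of names whose normalized similarity meets the threshold."""
    pairs = []
    for a, b in combinations(names, 2):
        score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs

# Hypothetical organization names, as they might appear in a legacy system.
organizations = [
    "Acme Pharma A/S",
    "ACME Pharma AS",
    "Acme Pharma, A/S",
    "Nordic Biotech ApS",
]

pairs = likely_duplicates(organizations)
for a, b, score in pairs:
    print(f"{a!r} ~ {b!r} (similarity {score})")
```

The pairwise comparison grows quadratically with the number of records, so larger data sets would need blocking or a dedicated matching tool. The candidate pairs should be reviewed by the business rather than merged automatically.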

The skills required for this are generally not available in the company resource pool, so it is worth considering external assistance or hiring resources with the required skills.

Data governance

Data governance-related issues will typically impede the data migration process. If handled with a data governance perspective, it is an excellent opportunity to establish business processes to maintain good data quality across systems.

When migrating master data, it is crucial that legacy values are mapped to approved/governed data sets. If not, the data migration will introduce data quality issues instead of solving them.

- The data migration approach for specific master data areas needs to be reviewed by available data management functions.

- If data management is not established, the data migration team should initiate/push for the required coordination between business areas to ensure high-quality master data going forward.

The easy decision of migrating all legacy values into, e.g., a product table to enable a smooth data migration should be made with care.

- If legacy values are migrated, they need to be inactivated after migration so that they are not used going forward.

- If legacy values are mapped to new product structures, transactional data needs to be mapped to these new product structures as well.
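The mapping of legacy values to governed values, and the follow-on remapping of transactional data, can be sketched roughly as below. All names here (`map_to_governed`, `remap_transactions`, the product identifiers, and the sample records) are hypothetical and only illustrate the principle. An actual migration would take the governed set from the data management function.

```python
from dataclasses import dataclass

@dataclass
class MappingResult:
    mapped: dict    # legacy value -> governed value
    unmapped: list  # legacy values that need a business decision

def map_to_governed(legacy_values, mapping, governed_set):
    """Accept a mapping only if its target is an approved, governed value."""
    mapped, unmapped = {}, []
    for value in sorted(set(legacy_values)):
        target = mapping.get(value)
        if target in governed_set:
            mapped[value] = target
        else:
            unmapped.append(value)
    return MappingResult(mapped, unmapped)

def remap_transactions(transactions, result, field="product"):
    """Point each transaction at the governed value; set unmapped rows aside."""
    migrated, rejected = [], []
    for tx in transactions:
        new_value = result.mapped.get(tx[field])
        if new_value is None:
            rejected.append(tx)
        else:
            migrated.append({**tx, field: new_value})
    return migrated, rejected

# Hypothetical governed product list and legacy-to-governed mapping.
governed = {"PROD-100", "PROD-200"}
mapping = {
    "Old Cream 5%": "PROD-100",
    "OLD CREAM 5 PCT": "PROD-100",
    "Ointment X": "PROD-999",  # target is not in the governed set
}
legacy_values = ["Old Cream 5%", "OLD CREAM 5 PCT", "Ointment X", "Gel Y"]

result = map_to_governed(legacy_values, mapping, governed)
transactions = [
    {"id": 1, "product": "Old Cream 5%"},
    {"id": 2, "product": "Gel Y"},
]
migrated, rejected = remap_transactions(transactions, result)
print("Unmapped legacy values:", result.unmapped)
print("Migrated:", migrated)
print("Rejected:", rejected)
```

Keeping the unmapped values and rejected transactions as explicit outputs gives the data migration team a concrete list to bring to the business, rather than silently loading ungoverned values.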

As mentioned, Veeva is popular in the pharma industry, and Veeva has realized that cross-vault integrations are required to increase efficiency in business processes. These integrations are still immature but will be improved in the coming years.

Compliance

Within the pharma industry, compliance is the alpha and omega. This is also true for data migrations.

The data migration will need to follow GAMP-5 principles, which means that there will be specific Standard Operating Procedures and Work Instructions to follow.

An important aspect is to support Business and IT stakeholders in case of compliance inspections at a later stage. An inspector will likely focus specifically on data migration aspects.

Hence, relevant information about data migration plans, risk assessments, data migration summary reports, etc., needs to be included in the inspection binder rather than being treated only as a one-time compliance deliverable that enables approval of the data migration.

Audit trails

Legacy audit trail information is a key data area, and it is mandatory to migrate the legacy audit trail for GxP data. Inspections typically require that it is possible to see historical changes to GxP data.

The audit trail is unique in the sense that it is a technical component of the source system and the target system. Thus, it is not possible to migrate a source system audit trail directly to a Veeva Audit trail. Veeva does not allow it.

There are three options. It is difficult to advise on the best solution, but the options should be explored upfront. They are:

1. Maintain a version of the legacy system (or just its data) to enable audit trail lookups.

2. Create a Veeva Audit trail data object to hold the legacy audit trail information.

3. Migrate the legacy audit trail as searchable PDF objects and insert them as Veeva attachments.

Open workflows

The Veeva vaults are workflow-oriented, and the workflow controls the state of data within the data objects. The workflows are quite complex. One state change can trigger actions on many other data objects.

The workflow and the state can normally only be manipulated via user actions, which increases the data migration complexity.

When migrating legacy data, most of the data will be historical in the sense that transactions are completed or closed.

The open legacy workflows, where data are in progress, need to be addressed with Veeva upfront.

- Is it possible to migrate legacy data in progress to the corresponding target workflow state?

  - If yes

    - How is it done?

    - What are the performance aspects when migrating?

  - If no

    - Why is it not advised to create in-progress workflows?

- Even though the complexity (cost) of creating in-progress workflows can be high, the alternatives are:

  - To ask the business to complete all in-progress workflows in the legacy system before migrating, or

  - To migrate all open workflows to the initial state in Veeva and then ask the business to move these workflows into the correct state.

All options should be clarified upfront, and stakeholders should be involved in choosing the right option.

A moving target

Due to the complexities, the sheer size of the data migration task, and the compliance aspect, the delivery of data migration can easily take 6 to 12 months or even longer for large vaults.

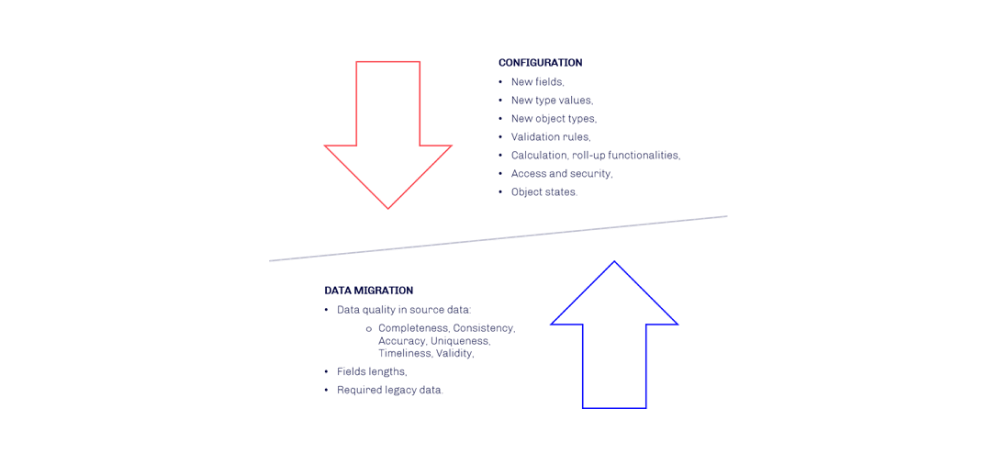

To optimize the delivery of the final solution to the business, the configuration of the vault is typically planned to be done in parallel with the data migration and typically under an agile regime.

From a data migration perspective, this means that the target data model is constantly changing, leading to rework.

Also, seeing a configured part of the vault with migrated data for the first time often leads to configuration changes.
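One way to keep the rework visible is to compare snapshots of the target data model between sprints and list the mapping rules that are affected. The sketch below assumes the model can be exported as a simple field-name-to-type dictionary. That export format, the field names, and the rule structure are all hypothetical and do not describe an actual Veeva interface.

```python
def diff_model(old_fields, new_fields):
    """Compare two snapshots of a target object's fields (name -> type)."""
    old_names, new_names = set(old_fields), set(new_fields)
    return {
        "added": sorted(new_names - old_names),
        "removed": sorted(old_names - new_names),
        "retyped": sorted(
            name for name in old_names & new_names
            if old_fields[name] != new_fields[name]
        ),
    }

def affected_rules(mapping_rules, diff):
    """List mapping rules whose target field was removed or changed type."""
    changed = set(diff["removed"]) | set(diff["retyped"])
    return [rule for rule in mapping_rules if rule["target"] in changed]

# Hypothetical snapshots of one target object taken in two consecutive sprints.
sprint_4 = {"name": "text", "status": "picklist", "strength": "text", "legacy_id": "text"}
sprint_5 = {"name": "text", "status": "picklist", "strength": "number", "country": "picklist"}

# Hypothetical source-to-target mapping rules maintained by the migration team.
rules = [
    {"source": "PROD_NAME", "target": "name"},
    {"source": "STRENGTH_TXT", "target": "strength"},
    {"source": "OLD_ID", "target": "legacy_id"},
]

diff = diff_model(sprint_4, sprint_5)
rework = affected_rules(rules, diff)
print("Model changes:", diff)
print("Rules to revisit:", rework)
```

Newly added fields do not break existing rules, but they may need new mapping rules, so the `added` list is worth reviewing as well.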
